Method

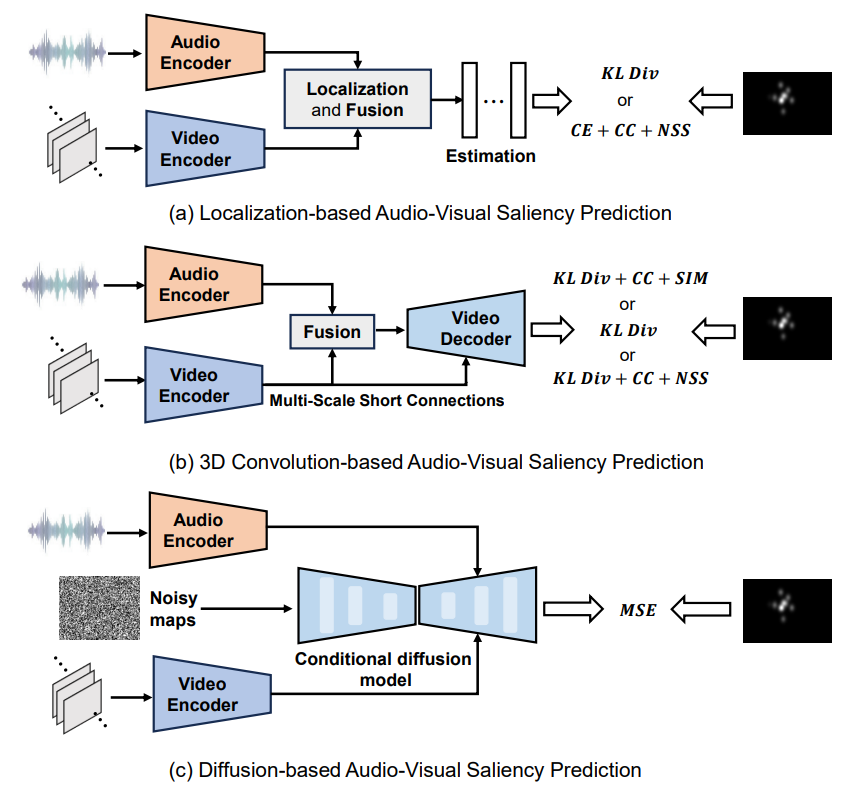

Both localization-based and 3D convolution-based methods rely on tailored network structures and sophisticated loss functions to predict salient regions.

In contrast, our diffusion-based approach is a generalized audio-visual saliency prediction framework trained with only a simple mean squared error (MSE) objective.
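To make the "simple MSE objective" concrete, here is a minimal NumPy sketch of a standard DDPM-style training loss. It assumes a linear noise schedule and assumes that the network predicts the clean saliency map x0. The text above does not say whether the target is x0 or the added noise, so both the schedule and the parameterization are assumptions. `denoiser`, `linear_alpha_bar`, `q_sample` and `mse_diffusion_loss` are hypothetical names, not DiffSal's code.

```python
import numpy as np

def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for an assumed linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, noise, alpha_bar):
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    a = alpha_bar[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise

def mse_diffusion_loss(denoiser, x0, t, alpha_bar, rng, cond=None):
    """Noise the ground-truth map, let the (hypothetical) denoiser recover it,
    and score the recovery with plain MSE. Assumes an x0-prediction target."""
    noise = rng.standard_normal(x0.shape)
    xt = q_sample(x0, t, noise, alpha_bar)
    pred = denoiser(xt, t, cond)  # cond would carry the video/audio features
    return float(np.mean((pred - x0) ** 2))
```

No saliency-specific term (KL, CC, NSS, etc.) appears in this loss. All task knowledge has to enter through the conditioning features passed to the denoiser.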

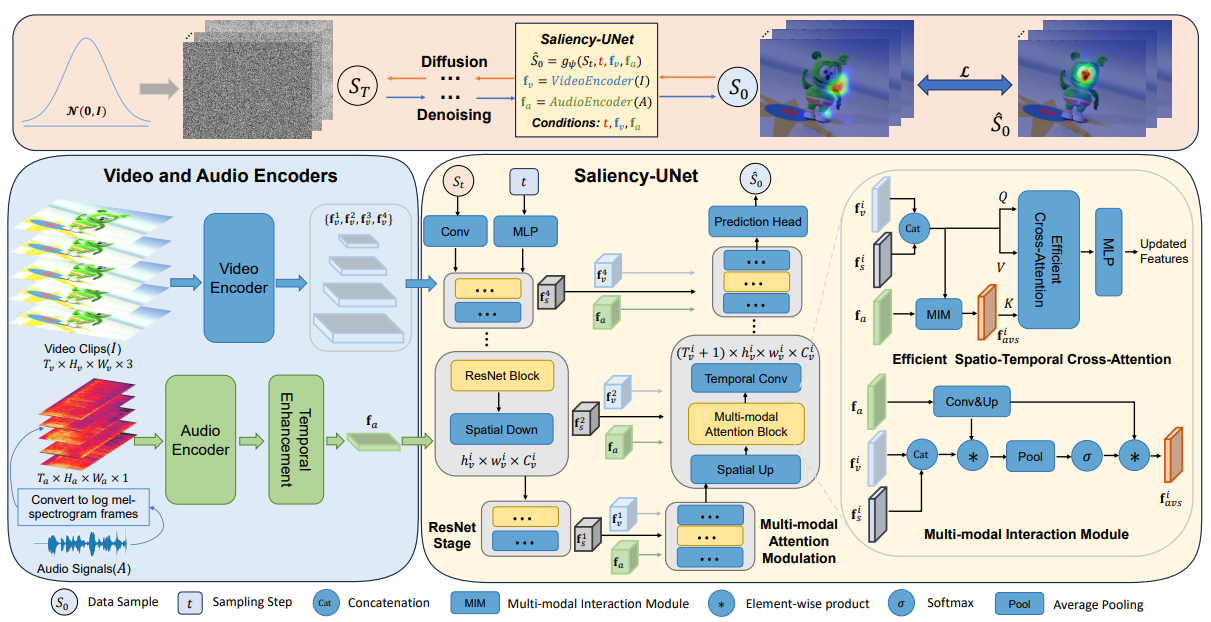

The proposed DiffSal consists of a video encoder, an audio encoder, and a Saliency-UNet.

The encoders extract multi-scale spatio-temporal video features and audio features from the input image sequence and its corresponding audio signal.
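The following sketch illustrates only the *shapes* involved. It is not a learned backbone. A (T, H, W, C) clip is average-pooled into a feature pyramid at several temporal/spatial strides, and the waveform is turned into a log-power spectrogram. Both operations are hypothetical stand-ins for DiffSal's actual video and audio encoders. The strides, frame length and hop size are illustrative assumptions.

```python
import numpy as np

def avg_pool_3d(x, st, ss):
    """Average-pool a (T, H, W, C) array with temporal stride st and spatial stride ss."""
    T, H, W, C = x.shape
    T2, H2, W2 = T // st, H // ss, W // ss
    x = x[: T2 * st, : H2 * ss, : W2 * ss]  # drop remainders that do not fill a window
    return x.reshape(T2, st, H2, ss, W2, ss, C).mean(axis=(1, 3, 5))

def video_pyramid(clip, scales=((1, 4), (2, 8), (2, 16), (4, 32))):
    """Hypothetical multi-scale spatio-temporal features: one pooled map per (st, ss)."""
    return [avg_pool_3d(clip, st, ss) for st, ss in scales]

def audio_features(wave, frame_len=400, hop=160):
    """Hypothetical audio stand-in: Hann-windowed log-power spectrogram."""
    n = 1 + (len(wave) - frame_len) // hop
    frames = np.stack([wave[i * hop : i * hop + frame_len] for i in range(n)])
    spec = np.abs(np.fft.rfft(frames * np.hanning(frame_len), axis=1)) ** 2
    return np.log(spec + 1e-10)  # shape: (num_frames, frame_len // 2 + 1)
```

For a 16-frame 224×224 clip, the pyramid resolutions run from 56×56 down to 7×7. This coarse-to-fine hierarchy is what a UNet-style decoder would consume at matching depths.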

Conditioned on these semantic video and audio features, the Saliency-UNet performs multi-modal attention modulation to progressively refine a noisy map toward the ground-truth saliency map.
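The next sketch shows two pieces that the description above implies. The first is cross-attention in which noisy-map tokens attend to the concatenated video and audio tokens. This is one plausible reading of "multi-modal attention modulation"; the exact Saliency-UNet block is not specified here. The second is a deterministic DDIM-style loop that repeatedly predicts the clean map and re-noises it to a lower noise level. The sampler, the x0-parameterization, the step count and every function name are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query_tokens, cond_tokens, wq, wk, wv):
    """Map tokens (N, d) attend to video+audio tokens (M, d); residual modulation."""
    q, k, v = query_tokens @ wq, cond_tokens @ wk, cond_tokens @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (N, M), rows sum to 1
    return query_tokens + attn @ v

def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    return np.cumprod(1.0 - np.linspace(beta_start, beta_end, num_steps))

def ddim_refine(predict_x0, shape, alpha_bar, num_steps, rng):
    """Start from pure noise and progressively refine toward a saliency map.
    predict_x0(x, t) is a hypothetical conditioned denoiser."""
    timesteps = np.linspace(len(alpha_bar) - 1, 0, num_steps).astype(int)
    x = rng.standard_normal(shape)
    for i, t in enumerate(timesteps):
        x0_hat = predict_x0(x, t)
        if i == len(timesteps) - 1:
            break
        a_t, a_prev = alpha_bar[t], alpha_bar[timesteps[i + 1]]
        eps = (x - np.sqrt(a_t) * x0_hat) / np.sqrt(1.0 - a_t)  # implied noise
        x = np.sqrt(a_prev) * x0_hat + np.sqrt(1.0 - a_prev) * eps  # step to lower noise
    return x0_hat
```

Using the video and audio tokens only as keys and values keeps the output in the map's own token space. That is why the result can be added back residually at every refinement step.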